Video : ER/Studio Data Architect

Data Architecture Trends

From traditional data warehouses and data lakes to next-generation data platforms such as the data lakehouse, data mesh, and data fabric, a strong data architecture strategy is critical to supporting an organization’s goals. Greater speed, flexibility and scalability are common wish-list items, alongside smarter data governance and security capabilities. Many new technologies and approaches have come to the forefront of data architecture discussions.

Watch this video to learn more about the trends in data architecture.

The presenters are:

- Jamie Knowles is a Product Manager at IDERA.

- Sam Chance is a Principal Consultant at Cambridge Semantics.

- Tim Rottach is the Director of Product Marketing at Couchbase.

- Joseph Treadwell is the Head of Partner and Customer Success at TimeXtender.

Transcript

00:00

Modern Data Architecture

Our presentation today is titled Top Trends in Modern Data Architecture for 2023. Before we begin, I want to explain how you can be a part of this broadcast. There will be a question and answer session. If you have a question during the presentation, just type it into the question box provided and click on the submit button. We’ll try to get to as many questions as possible, but if your question has not been selected during the show, you will receive an email response.

01:21

IDERA Data Architecture Tools

Great. Thanks, Steven. Thanks very much. Okay, so let me introduce who IDERA is. I’m from the Data Tools division. We’ve got a whole raft of products around data management and analytics. For today’s presentation, the products that are relevant are WhereScape, which is a tool for automating the creation of static structures and data pipelines of a warehouse, all based on metadata. We’ve got Qubole, the cloud hosting for data lakes. We’ve got ER/Studio, a data modeling tool to document and design data assets, and Yellowfin, which is a business intelligence tool. Today I’m going to focus on the data analytics space. We’re seeing a lot of change and a lot of innovation in this space. In this diagram that you can see here, we’ve got data sources over on the left and we’ve got data consumers on the right. Data sources will be our applications and databases, and we’ve got all sorts of files and pieces of data from the Internet of Things.

02:23

Over on the right we’ve got our consumers, which might be BI tools like Yellowfin. There are also modern knowledge graph tools like the ones Cambridge Semantics is going to talk about. The journey between them can be pretty complicated. On this diagram, we can see there are three possible journeys, and there are others available. We might have a formal data warehouse that’s formally structured, we might have a data lake, and we might have more modern data virtualization and streaming platforms. In between them, we might have a convoluted journey of movements: data pipelines, data fed by ETL tools, and ELT pipelines implemented in code. Now there’s a lot of complexity here. The first challenge is that all these tools and technologies need to be configured, set up and run, which needs deep skills and a lot of work to make it happen. There’s also the challenge of understanding, well, what data is in all of this, and what does it all mean?

03:26

Data Governance

Where did it come from? If I’ve got a problem over on the right-hand side, why have I got a problem? Is it a problem at the source? Was there a data quality problem? Did something happen in one of those pipelines? Accessing this data, and trying to take advantage of the different technologies and the paths it takes, is also hard because of this complexity. We’re seeing a lot of problems with things like data swamps and shadow IT. That is where people are creating their own little pools of data and keeping them to themselves. That results in problems with things like governance. If you’ve got regulatory requirements like GDPR, understanding where personal data is throughout can be quite a challenge. If you have to act on a GDPR request to forget someone’s data, then crikey, where do we go in here to find or get rid of that data?

04:17

Data-Driven Enterprises

There’s a lot of complexity in the technology and the tools, and we’re also seeing that there are disconnects and complexity with the people. There are various groups in the organization that claim responsibility for data and information. We’ve got the data analysis team, and they’re producing the insights from all of that data. We’ve also got the data architects in the blue there. They’re designing and documenting data assets using models. Down at the bottom, we’ve got our data governance team, which usually sits in the business rather than in IT. They’re very separate from the other two teams, and they’re putting the meanings together for pieces of data and setting the rules around that information, so they can be disconnected as well. There might be other groups like application developers and integration teams too. So what’s our mission? Really, in this modern day and age, we want to get data to people as quickly as possible.

05:14

Data Sources

We want to be taking advantage of that data, exploiting it, getting value from it and monetizing it. We really want to get data from the source to the target as quickly as possible. So we want a data supply chain. Think about an Amazon-type world where people can go and browse for data through a beautiful storefront. We’ve got a whole ecosystem that’s well connected, with tools, people and automation that are going to get that data and get it to you as quickly as possible. Not only that, we’ve got governance taken care of, baked in throughout. We want to make sure that the right data is getting into the hands of the right people and not into the hands of the wrong people. Okay, so we’re going to protect our data. So how do we do that? We’re seeing two things that are really going to help with this data supply chain.

06:01

Data Fabric

The first thing is a data fabric. Now we’re seeing lots of definitions of this. Even if we’re confused about what a data fabric really is, the way we see it is pragmatic. In that chart that we were looking at earlier, that journey from source to targets, we want to see lots of automation. With the data warehouse, for instance, we want to be able to automate the creation of the regions in the warehouse and the pipelines. This is where tools like WhereScape can really help. We’ve got Qubole for automating the data lake, and Yellowfin, which is producing insights from the data in the top right-hand corner. Then we’ve got the data storefront. That’s going to be built around a business glossary so people can come in and look for pieces of information. That’s going to be mapped to a data dictionary, a catalog of all of the different assets on the diagram at the top, mapped to those business terms.

06:53

Data Lineage

We can see, well, where is our information? We know exactly where it is. We know the data lineage: where did it come from, and what happened to it along the way? So that’s an ecosystem of tools. We see a data fabric as really an architectural approach. We’d love to sell you all our products that can implement this, but we’ve got to work with lots of other tools as well and make them plug-and-play and interchangeable. That’s an important part of it. The next part is the people. We’ve got a notion of a data mesh that you might have heard about. Many talk about data mesh as being about decentralization. I think it’s more about the focus on products. We’re going to be able to provide data products that the business wants, in a form that they can use, so we can organize our people and that information into different domains around the organization that are independent.

07:46

Data Modeling

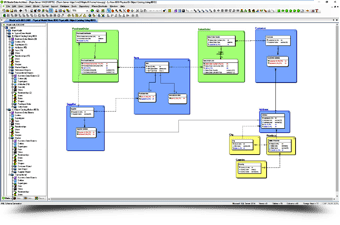

They’re going to work on what products they’re going to provide to their consumers and set the rules around those pieces of data. Just because they’re working in decentralized modes doesn’t mean they’re working in silos. We need some overarching framework that’s going to bring them all together. This is where models can come in and help things out. In terms of this diagram here, over on the right, this is a traditional data modeling approach for our data assets. We’re going to create physical data models. Over on the right, we can see we’ve got a table in a database called Cast with a column called C_RTNG. We’ll generally design and document that with logical data models in data modeling tools like ER/Studio, so you work with the business users, design the logical model and then translate it to a physical model. Now, there is a similar model in the data governance world.

08:35

Enterprise Data Model

In a data catalog we can have two parts again: the technical data dictionary on the right-hand side, with some model of the physical data asset. That now is going to be mapped to our business terms in our business glossary. Okay? Now what we really want to do is bring all those three worlds together, because they’re semantically the same, and try to unite the different understandings of the information. If we can do that, then we can start building out things like an enterprise data model. At the top, we’re going to have an enterprise conceptual model with a small number of objects. That is the information the organization really cares about. Below that we’ll have a single enterprise logical model, or a number of them, with standardized entities representing things like customer and order. We can have standard attributes with standard data types. We’ll understand exactly how to uniquely identify a customer or an order.

09:33

Business Glossary

For all of our different data assets, we’re going to have logical and physical models that represent them. If we bring in the business glossary, then we can connect the worlds together. Now things start to get interesting. I can now say, well, where is the credit rating score? I can see all the different models. I can come up from the bottom: C_RTNG, what does it mean? Tell me about the data type and the standardized version of it. Tell me about the rules that are associated with it in the business glossary, which helps us govern it. Extrapolating that out, we’re going to have all of our application databases. We’ve got our NoSQL structures like Couchbase and MongoDB, we’ve got our JSON messages, our data warehouse and our BI reports, all tied together with a common model, our enterprise logical model, and all tied into the business glossary.
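The two-way lookup described here, from a glossary term down to physical columns and from a cryptic column name back up to its meaning, can be sketched in a few lines of Python. This is only an illustration under assumptions: the dictionaries, the `where_is` and `what_is` helpers, the `dim_customer` table, and the glossary wording are all hypothetical and do not reflect how ER/Studio or any catalog stores its metadata.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PhysicalColumn:
    asset: str   # database, message, or report the column lives in
    table: str
    column: str


@dataclass(frozen=True)
class LogicalAttribute:
    entity: str
    name: str
    data_type: str


# Business glossary: term -> definition and governing rule (hypothetical content).
GLOSSARY = {
    "Credit Rating Score": {
        "definition": "Score describing a customer's creditworthiness.",
        "rule": "Restricted: visible to the finance domain only.",
    }
}

# Glossary term -> standardized attribute in the enterprise logical model.
TERM_TO_LOGICAL = {
    "Credit Rating Score": LogicalAttribute("Customer", "CreditRating", "INTEGER"),
}

# Logical attribute -> every physical column that implements it.
LOGICAL_TO_PHYSICAL = {
    LogicalAttribute("Customer", "CreditRating", "INTEGER"): [
        PhysicalColumn("crm_db", "Cast", "C_RTNG"),
        PhysicalColumn("warehouse", "dim_customer", "credit_rating"),
    ],
}


def where_is(term):
    """Top-down: list the physical columns that hold a business term."""
    logical = TERM_TO_LOGICAL[term]
    return LOGICAL_TO_PHYSICAL[logical]


def what_is(column_name):
    """Bottom-up: resolve a physical column name to its term, type, and rule."""
    for term, logical in TERM_TO_LOGICAL.items():
        for col in LOGICAL_TO_PHYSICAL[logical]:
            if col.column == column_name:
                return {"term": term, "data_type": logical.data_type, **GLOSSARY[term]}
    return None
```

Calling `where_is("Credit Rating Score")` walks down through the logical model to the physical columns, while `what_is("C_RTNG")` walks back up to the glossary entry and its rule.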

10:20

Data Infrastructure Automation

Now things get really exciting. This is where automation can now really kick in. We’re automatically designing a data warehouse, so ER/Studio can pull information from Collibra and the data governance world and pass over a fully marked-up model of the data asset to WhereScape, which can then automatically create security in the warehouse from that. So fully automated security. Okay? In summary, we want a well-governed data supply chain. In order to do that, we need a number of parts. We need the data fabric, so all our tools and technologies work together with lots of automation. We need people to be able to work together in a data mesh focused on data products, providing the right products to the right people. To unite everything, we need an understanding of that information: lots of metadata organized into structured models, hopefully traditional data models, and then also the data catalog with the business glossary tying everything together.
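To make the idea of generating security from a marked-up model concrete, here is a minimal Python sketch that turns column classifications into column-level `GRANT` statements. Everything in it is an assumption for illustration: the table, the classifications, the roles, and the `grants` helper are hypothetical, and it does not represent how ER/Studio, the governance catalog, or WhereScape actually exchange or apply metadata.

```python
# Column metadata as it might arrive from a governance catalog (hypothetical).
MODEL = {
    "dim_customer": {
        "customer_id": "public",
        "name": "personal",
        "credit_rating": "restricted",
    }
}

# Which roles may read which classification (hypothetical policy).
POLICY = {
    "public": ["analyst", "finance"],
    "personal": ["finance"],
    "restricted": ["finance"],
}


def grants(model, policy):
    """Produce one column-level GRANT statement per table and role."""
    statements = []
    for table, columns in model.items():
        by_role = {}
        for column, classification in columns.items():
            # Every role allowed to see this classification gets the column.
            for role in policy[classification]:
                by_role.setdefault(role, []).append(column)
        for role in sorted(by_role):
            cols = ", ".join(by_role[role])
            statements.append(f"GRANT SELECT ({cols}) ON {table} TO {role};")
    return statements


for statement in grants(MODEL, POLICY):
    print(statement)
```

The point of the sketch is that once classifications live in the model, access rules follow from metadata rather than being written by hand for each table.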

11:31

Data Architecture Best Practices

To forge this connectedness, there are a growing number of development and production projects that are more ontologically connected, with disparate data sources at the application or even the enterprise scopes. We’re going to see this trend expand to larger communities of interest and ecosystems. I’m going to overview some drivers, some technical enablers, and some ascendant data architectures.

13:05

To begin, people, places, things and processes in the real world are rapidly becoming digitized. The logical next step is to connect everything. The larger vision, however, is increased delegation and automation to automated systems and then increased autonomy of these systems and processes. Think business to business, for example, supply chains. Yes, for better or worse, many people want to connect humans to this ecosystem as well. It’s also known as convergence. So nothing says connected like Graph. It turns out that the Graph data model fits well for connecting and accessing digitized real-world objects. In fact, in our humble opinion, the killer application for the Graph data model is large-scale data integration. In other words, a Graph is a key enabler for large scope and scale connectedness. So the vision also requires data interoperability. To achieve the vision that we want to get, the data has to be interoperable and at scale.

14:22

Data Architecture Trends

There are defined levels of interoperability, and we’ve written about these on our website and in other papers. The trends in data architecture will increasingly focus on semantic interoperability. When I say semantic interoperability, I mean machine understandable, i.e. the machines understand the data that’s being passed between them. So what does data interoperability look like? Well, please excuse this slide. It’s a product slide, but the idea is that it’s showing semantics applied to data in a graph model. We call the final product that’s created a knowledge graph. What knowledge graphs do is normalize the structural heterogeneity of the contributing data sources, whether they be structured or unstructured, and harmonize the meaning or the semantics using unambiguous ontology models. Importantly, unambiguous formal ontology models are a critical enabler to the vision. I think that’s another key takeaway for people who are interested in what’s coming next. Knowledge graphs are interoperable, they connect to each other, and they are machine understandable.

15:51

That is the case when you create them using W3C standards: Resource Description Framework and Web Ontology Language, or RDF and OWL. Perhaps more subtly, knowledge graphs are modular, which facilitates sharing, reuse and maintainability. They can be physically distributed but logically interconnected and queryable using, for example, a federated query architecture. And this promotes decentralized data architectures. Distributed data models that are logically integrated and based on semantics are key enablers to the overall vision. We already do this for basically the enterprise or for a domain at a time.
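The notion of graphs that are physically separate but logically joined can be illustrated with a small Python sketch. It is deliberately not RDF, OWL, or SPARQL: the triples, the `ex:` identifiers, and the helper functions are hypothetical, and the matching is plain Python. What it shows is that a shared identifier is enough to join data held in two different stores.

```python
# Two physically separate "graphs", each a set of (subject, predicate, object) triples.
SALES_GRAPH = {
    ("ex:order42", "ex:placedBy", "ex:customer7"),
    ("ex:order43", "ex:placedBy", "ex:customer9"),
}
CRM_GRAPH = {
    ("ex:customer7", "ex:name", "Ada"),
    ("ex:customer9", "ex:name", "Grace"),
}


def match(graph, pattern):
    """Yield triples matching a pattern; None acts as a wildcard."""
    for triple in graph:
        if all(p is None or p == t for p, t in zip(pattern, triple)):
            yield triple


def federated_match(graphs, pattern):
    """Run the same pattern against every graph and merge the results."""
    for graph in graphs:
        yield from match(graph, pattern)


def customer_name_for_order(order, graphs):
    """Join across graphs: order -> customer (sales) -> name (CRM)."""
    for _, _, customer in federated_match(graphs, (order, "ex:placedBy", None)):
        for _, _, name in federated_match(graphs, (customer, "ex:name", None)):
            return name
    return None
```

Here `customer_name_for_order("ex:order42", [SALES_GRAPH, CRM_GRAPH])` never needs to know which graph holds which fact, which is the property a federated query layer is meant to provide.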

17:37

Data Mesh

We can also start to see we have data publishers, data consumers, a catalog and policies. This should start to remind us of something. Also, here’s where each domain or product area can implement its architecture using a knowledge graph. Strictly speaking, as long as the data product understands how to interpret queries and the semantics of those queries, then it really doesn’t matter what the back-end data is. In practice, we serialize and marshal the data in the knowledge graph because that provides other benefits. Here’s another abstraction, and what we’re getting closer to is the good old service-oriented architecture, where operationally data product providers are going to register their service and their product descriptions in a catalog, then consumers are going to search that catalog for lookup and discovery, and then finally they’re going to retrieve that data.
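The register, search, and retrieve cycle described above can be sketched as a tiny in-memory catalog in Python. The class names, fields, and the sample product are hypothetical and chosen only for illustration; a real catalog would also carry policies and, as discussed here, might itself be a knowledge graph.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class DataProduct:
    name: str
    domain: str
    description: str
    fetch: Callable[[], list]   # how a consumer retrieves the data


@dataclass
class Catalog:
    products: dict = field(default_factory=dict)

    def register(self, product):
        """Provider side: publish a product description."""
        self.products[product.name] = product

    def search(self, keyword):
        """Consumer side: discover products by keyword in the description."""
        kw = keyword.lower()
        return [p for p in self.products.values() if kw in p.description.lower()]

    def retrieve(self, name):
        """Consumer side: pull the data through the product's own interface."""
        return self.products[name].fetch()


catalog = Catalog()
catalog.register(
    DataProduct(
        name="monthly_orders",
        domain="sales",
        description="Orders aggregated by month",
        fetch=lambda: [{"month": "2023-01", "orders": 120}],
    )
)
```

A consumer would call `catalog.search("orders")` to discover the product and `catalog.retrieve("monthly_orders")` to get the rows, without knowing what back end the sales domain uses.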

19:09

Also, the catalog itself can be a knowledge graph and arguably should be. Basically, the data mesh brings us multiple domains and it also brings us data products, but it’s basically revisiting the service-oriented architecture construct. To summarize the way forward, we’ll see distributed data models that are logically connected and accessible as a seamless data model. The data can be distributed everywhere, but it’s logically accessible through the query language, which in our case would be the W3C’s SPARQL. This implies that we’re going to see increased adoption of machine-understandable semantics, better federated query architectures and more data virtualization.

20:27

As we think about modern applications, we think about some key themes of how application development has changed over the past ten to 20 years. Right? There are a few key areas, such as the delivery of great experiences. In the past, applications were built mainly for organizations, and a small number of people. Now it’s very much about engaging a user, whether that be on a mobile device or even a laptop or some other mechanism.

21:30

Modern Application Development

Applications today need to be developed efficiently. We’ve got limited resources. We want to make sure we’re leveraging the skill sets of people on our team, and they need to be deployed effectively. Right. We’ve got people using applications often from all over the globe, on different types of devices. You want 100% uptime and you want flexibility in your deployment. Those needs of modern applications have also changed the needs and requirements for modern databases. In the past, legacy databases needed very structured data, ensuring ACID transaction integrity. We had to make sure that it was very efficient in terms of how data was stored. While those things are still important, modern needs have expanded so much in terms of what a database needs to do: the number of users, scaling up, scaling down, delivering high performance, and being flexible enough to deliver applications in a microservice-type architecture.

22:36

I’m going to talk to you about Couchbase Capella, which is our database as a service. It’s a distributed database, so data is automatically sharded, resulting in high availability for an application. We are a NoSQL database, but we’re storing data in JSON, and we use SQL++ as our query language. For those who know SQL, both of these are very familiar.
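As a rough illustration of what automatic sharding with replicas means, the Python sketch below hashes a document key to a shard and places each shard on a primary node plus a copy. It is a simplified teaching example and not Couchbase’s actual placement algorithm: the shard count, node names, and functions are all assumptions.

```python
import zlib

NUM_SHARDS = 8                          # hypothetical; real systems use far more
NODES = ["node-a", "node-b", "node-c"]  # hypothetical cluster members


def shard_for(key):
    """Deterministically map a document key to a shard."""
    return zlib.crc32(key.encode("utf-8")) % NUM_SHARDS


def nodes_for(shard, replicas=1):
    """Primary node plus `replicas` copies on the following nodes."""
    return [NODES[(shard + i) % len(NODES)] for i in range(replicas + 1)]


def placement(key, replicas=1):
    """Return the nodes that hold a given document key."""
    return nodes_for(shard_for(key), replicas)
```

Because the mapping is deterministic, any client can compute where `placement("user::42")` lives, and because each shard has a copy on a second node, losing one node does not make the document unavailable.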

As they start using Couchbase, we’re delivering high-performance storage and clustering, managing all the replication, and managing all the synchronization of data between applications, the cloud, and IoT and mobile devices. So why do customers come to Couchbase? There are four key reasons that bubble up over and over again. One is performance. Couchbase is based on a memory-first architecture. We have a lot of customers who come to us because their current legacy databases just aren’t giving them the performance they need, whether that be a relational or even a NoSQL database.

23:45

Modern Data Architecture Demands

They want flexibility. They want agility in how they build applications and evolve those applications. They don’t want to be locked into a rigid schema. They want strong mobile and IoT support, including offline availability and uptime, and they want to drive down the total cost of ownership. These are things we hear from our customers over and over again. You see here some of the key user profiles and use cases where we’re working with our customers. A few examples of those, real quick. FICO, one of the largest institutions in the finance industry in terms of managing credit card transactions and that type of information, uses Couchbase for its fraud detection application. Carnival Cruise Line has a program called the Ocean Medallion Program that is used on ships. People are on the cruise ship and they have a little medallion to take with them. As they go around the ship and do different activities, Carnival will present them with new options and ensure that they are getting a very rich, personalized experience.

25:01

Application Architecture

Everyone on the ship is having a different experience, even though they’re on the same boat. LinkedIn uses Couchbase for its caching. As you log into LinkedIn and manage your profile, making updates to that is powered by Couchbase. Staples uses Couchbase for its product catalog with its B2B shoppers, ensuring, again, a personalized experience. People are coming in from different companies and different teams, and based upon their location and a whole bunch of other criteria, what they might be presented with as a catalog is very different from that of another person. And so who else is using Couchbase? Well over 30% of the Fortune 100 use Couchbase on a daily basis for mission-critical applications. You can see that we’re used across a wide variety of different verticals. Let’s talk more about Couchbase architecture. If we think about a very simple application, right?

26:06

Modern Data Stack Architecture

You have your core functional requirements, you have a database underneath and you have infrastructure supporting that, right? In reality, what we’ve seen, and this has evolved over time, is that applications and microservices have moved to this concept where they might be using multiple databases to support all of the different functional requirements that they want to support. They might have a caching product for speed, they might be using a relational database for transactions, they might be using a full-text search tool, they might be using an analytics platform as well, and then a mobile app. All of those things combine to solve specific problems. The challenge with that, though, is that managing all of these different technologies underneath runs into a lot of problems in terms of managing the data and integrating the data, as well as all the other operational aspects in terms of licensing, training, support and security.

27:12

Couchbase offers a different solution, which is a multi-model approach. Customers choose Couchbase because it’s very robust in terms of its broad ability to store and access data and support different use cases. So Couchbase has a built-in integrated cache. It stores data as JSON documents and, as I mentioned earlier, uses SQL as its main query language, but it also supports full-text search, operational analytics and eventing, all built in to support those different use cases. As a distributed database, it automatically replicates data throughout the world and synchronizes that data out to mobile devices, even for offline use. All of that combined makes up the Couchbase data platform. What it delivers to customers is speed, in terms of a memory-first design and high-powered distributed caching; flexibility, in our JSON document data model and multi-model data access; and familiarity, since those who know SQL find it very easy to come on board with Couchbase but still have the power of things like ACID transactions and so forth.

28:25

Data Architecture Scalability

Delivering speed and scalability in an elastic way makes Couchbase very affordable. We’ll get into that in a moment. In terms of the options for delivering consistency to customers, Capella is our database as a service. For those who are looking to self-manage, we have our server product, and we have our mobile and IoT offering, which integrates with the back-end cloud databases through our synchronization. Really quickly, just looking at some industry benchmarks here, what we’re looking at is the YCSB benchmark for NoSQL, comparing Couchbase to other NoSQL databases in the industry. This was run by a third party, and what we’re looking at here is the cost it would take to do a billion operations. Workload A is inventory updates, read and write. Workload E is online shopping, where it’s a lot more in-memory processing. What you can see is that the cost for the different players in the space is much higher than for Couchbase Capella.

29:37

You can see that in terms of throughput, latency and cost savings, Capella is ahead. Here are some real results to back this up. This data that you’re seeing here is from TechValidate, a service where customers come in and publish their own data on how they’re working with Couchbase and others.

30:43

Data Analytics Architecture

What we’re starting to see as an ongoing trend is that smaller and smaller organizations want to get the same business value from analytics that everyone else is talking about. What we’re seeing is folks, data analysts, that maybe have tools like Excel, Power BI or Tableau, and they’re trying to get insights into the business as quickly as possible.

31:59

Enterprise Data Architecture

Of course, they’re running into bottlenecks and barriers, because we all know you need a robust data architecture if you’re going to make sense of complex data. As they go into this, they’re starting to realize that they need skills to ingest, model and transform data, and they’re trying to do that themselves. This role is becoming more and more common in organizations, and it’s being dubbed the analytics engineer: essentially a data analyst that has acquired and built up the skills to do a lot of data engineering tasks. The challenge is, again, that best-practice data architectures are highly complex. Again, a lot of the solutions, a lot of the software that’s available out there, are designed for large enterprises. How are one, two or three analytics engineers going to be able to deploy these solutions that are designed to be managed by ten or 15 different people?

33:01

The typical solution for a lot of enterprise organizations is what’s known as the modern data stack. The modern data stack is a collection of different tools that are put together to provide insights for the organization. The challenge is, of course, this can be very time-consuming to build, right? There are a lot of different tools. As we all know, acquiring any one of these tools in an organization means that everyone needs to agree on it and we need to sign paperwork. It can take months just for a single tool to be able to be adopted in the organization. You’ll have to, of course, set them up, right? You have to set each one of these up and then you have to stitch them together. Oftentimes these tools don’t talk to one another, right? There’s going to be some custom integration that’s happening, bringing them together, trying to make them work together.

33:59

They lack holistic governance because, again, none of the tools talk to each other. You don’t know who has access to what. That can create some issues depending on GDPR or other regulatory compliance that might apply in the organization. Then orchestrating from the beginning all the way to the end can be very complex. What you’re starting to see here is that in order to deploy and maintain a solution like this, it really is going to require a large team of highly experienced, highly educated people. As I say that, I’m sure you can start to imagine the dollar signs going across my eyes like the old-school cartoons, right? This type of solution is extremely expensive to deliver, which means that it’s really only for large enterprises. Only large enterprises can have a team of ten to 15 master’s degree-level engineers that are maintaining this.

35:01

Agile Data Architecture

So what is the solution here? Right? How do we move forward? We know that technology is becoming increasingly easy to access, right? Tools like Snowflake, Redshift and Synapse are out there and available, so we can go and log into a website and deploy in just a matter of minutes. We don’t need to go and get all of this hardware, put it all together, and stitch it together anymore. We can do that just in the cloud. Technology is much easier to access. Still, only large enterprises can afford to deploy these, because it still requires highly skilled engineering teams to do this, right? You still need ten to 15 people to interact with and code on a data lake, or interact with a database like Snowflake, because it still requires a lot of manual hand coding. The rest of us need something different. In order for smaller and smaller businesses to start deploying a robust data architecture, they need a solution that’s agile.

36:24

When I say agile, I mean something that’s really focused on business outcomes and delivering business outcomes quickly. Because that’s the end goal here, right? They need something that’s holistic, meaning not a bunch of tools that are stitched together, not a bunch of time being spent on training on 15 different platforms, but something in which you can ingest, integrate and deliver data in a very simple solution. And also something that’s easy to use. Again, you can’t have five to ten master’s degree-level people on your team. If you’re a small to medium-sized business, it’s just too expensive. You need something that’s easier to use. That’s really where our solution comes in. TimeXtender is a low-code, no-code interface that helps any size business deliver real outcomes through data solutions ten times faster. It reduces costs by 70% to 80%. Again, a lot less time coding means a lot more time delivering insights.

37:31

Modern Data Platform

TimeXtender delivers these solutions in three distinct layers. The first layer is what we refer to as the operational data exchange. Now, this is typically considered a data lake. It doesn’t have to be a data lake, but this is the initial layer where you ingest the raw data. The second layer is the modern data warehouse, and this is where, of course, you’re integrating that data together, making it available in a clean, modeled way that’s easier to access for the organization. The third layer is what we call the semantic models. This means being able to take that large data warehouse and break it out into small data models that are relevant for different departments. You might have one for sales, one for the purchasing department, one for the finance department, and the point is making that as easy as possible to access. Again, having this in a low-code, no-code platform means that a data analyst can now achieve this type of architecture, deliver that for their organization and start delivering real, true business outcomes with data.

38:41

One of the key outcomes of TimeXtender as well is that the business logic, how this data comes together, is abstracted from the data storage layer. If you choose to start and build this on SQL Server, you can build all the business logic out and have a solution. Later on you might say, you know what, I want to switch to a data lake and Snowflake. Well, in TimeXtender, that’s just a checkbox, and all of the underlying code can be redeployed within just a few seconds. Now, because TimeXtender keeps track of all the metadata, that means that things like documentation and data lineage are just one click away. Not only that, because TimeXtender maintains all the dependencies for each table in the entire solution, orchestration is intelligent. It’s also reordered for efficiency. That means you’re going to have the fastest load times possible.
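Dependency-aware orchestration of this kind is commonly built on a topological sort. The Python sketch below uses the standard library’s `graphlib` (Python 3.9 or later) to group tables into load batches; the table names and the `load_batches` helper are hypothetical, and this is a generic illustration rather than a description of how TimeXtender schedules its loads.

```python
from graphlib import TopologicalSorter

# table -> tables it depends on (hypothetical names)
DEPENDENCIES = {
    "raw_orders": set(),
    "raw_customers": set(),
    "dw_customer": {"raw_customers"},
    "dw_order": {"raw_orders", "dw_customer"},
    "sales_model": {"dw_order"},
}


def load_batches(dependencies):
    """Group tables into batches; tables within a batch can load in parallel."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    batches = []
    while sorter.is_active():
        # Everything returned here has all of its dependencies already loaded.
        ready = sorted(sorter.get_ready())
        batches.append(ready)
        sorter.done(*ready)
    return batches


for number, batch in enumerate(load_batches(DEPENDENCIES), start=1):
    print(number, batch)
```

With these sample dependencies the two raw tables load together first, and each later table starts only once everything it depends on has finished, which is what keeps the overall load both correct and short.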

39:39

Now, personally, I’ve helped tons of customers obtain real business outcomes incredibly fast using TimeXtender, even with a team of just one to two people. It’s really incredible to see these smaller organizations being able to achieve these real business outcomes. I’ve seen what they go through, and it’s tough, it’s not easy. Having a solution that makes it a lot easier to achieve this and seeing the reactions from people is just really exciting.

Topics : Data Fabric, Data Governance, Data Modeling

Products : ER/Studio Data Architect, ER/Studio Data Architect Professional, ER/Studio Enterprise Team Edition, ER/Studio Team Server Core